How PMs can use AI tools to analyze customer feedback

+ Ideas for Product Managers to add value to their team in their first 30 days

Hey BPL fam,

Here’s what we have in today’s edition:

Quick survey for an in-person community meetup

How to use AI tools to analyze customer feedback

Ideas for a new PM in the first 30 days on the job

Without further ado, let’s dive in.

Interested in a community meetup?

I was reviewing my schedule the other day and realized I’ll be in four different cities over the next 90 days:

Vancouver, Canada

Seattle, United States

Toronto, Canada

Lahore, Pakistan

I thought this might be an opportunity to get out and meet the fabulous community that reads Behind Product Lines.

Please fill out this survey to register your interest.

If there is sufficient interest in meeting up in a specific city, I’ll email you the details beforehand.

How to use AI tools to analyze customer feedback

At vFairs, we get a lot of customer feedback from different sources: G2, Capterra, Customer surveys, emails, Gong and so on.

In the past, we’d consolidate all the feedback in a “Voice of Customer” channel on Slack, or have a team of review analysts go through the forms manually, make sense of them, and present findings to leadership.

Needless to say, this process is arduous and slow, and it simply doesn’t happen often enough.

I started toying around with some AI tools to see how we could make this process faster. I thought I’d share my results with the community.

For the purposes of this example, I’m going to focus on analyzing feedback on G2 - a third-party review site. You could apply the same technique to any other review repository like TrustRadius or Capterra.

Step 1: Scraping Reviews

Sadly, G2 doesn’t allow vendors to export their reviews, so I used a tool called Browse AI. It has a module for extracting structured data: you plug in a target URL, highlight the areas on the page that you want to scrape, and then download a CSV.

Yes, I’m aware that such tools existed before, and I’ve used plenty. However, in my experience, both the process of setting up the scraper and the scraper’s accuracy were far better with Browse AI.

(I’m using Zapier’s review page below as an example)

Tip: Browse AI gives you the option to capture a div element’s HTML as well as its text. In addition to the text elements, capture the entire HTML of one list item so that you can work with non-textual items. For example, I couldn’t scrape the star ratings in each review because they’re rendered as images, but capturing the HTML came in handy (as I’ll show you in a minute).

Once you test the scraper, you can repeat the process for as many pages as you want to capture.

After the run finishes, it’ll bring you to a table like the one below where you can verify the scraped content. Boom. Hit download and get your CSV.

Don’t worry if certain columns aren’t perfect.

Step 2: Analyzing the CSV

I tried analyzing the CSV on both Anthropic’s Claude and ChatGPT’s Advanced Data Analysis Tool.

In my humble opinion, Claude was quicker to understand what I was trying to do and produced better responses. ChatGPT offered better formatting, but it focused a lot more on word analysis, which required many more course-correcting prompts.

We’ll use Claude for the purposes of this example.

Drop your CSV in and see if Claude understood it.

Now, my CSV file had a few issues. The job title column had a few glitches and I didn’t have text values for the star ratings, as mentioned before.

So, I asked Claude to clean that up for me:

Cool. Now that’s sorted, I can move into analysis.
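As an aside, if you would rather pull the star ratings out yourself instead of prompting for it, the captured HTML can be parsed with a few lines of Python. This is only a minimal sketch: it assumes the rating is encoded in a CSS class such as `stars-9` (meaning 9 half-stars, i.e. 4.5 out of 5). That class pattern and the function name `stars_from_html` are hypothetical, so inspect your own scraped HTML and adjust the pattern to whatever you actually see.

```python
import re
from typing import Optional

# Assumption: the rating appears in the scraped HTML as a class like "stars-9",
# meaning 9 half-stars (4.5 out of 5). Adjust the pattern to match your data.
STAR_CLASS = re.compile(r"\bstars-(\d+)\b")


def stars_from_html(html: str) -> Optional[float]:
    """Return the star rating found in an HTML snippet, or None if absent."""
    match = STAR_CLASS.search(html)
    if match is None:
        return None
    half_stars = int(match.group(1))
    return half_stars / 2


# Made-up snippet for illustration only.
sample = '<div class="stars large stars-9"></div>'
print(stars_from_html(sample))  # prints 4.5
```

Returning `None` for rows without a match (rather than 0) keeps missing ratings from dragging down any averages you compute later.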

Step 3: Analyze the feedback

Let’s warm up with a general prompt:

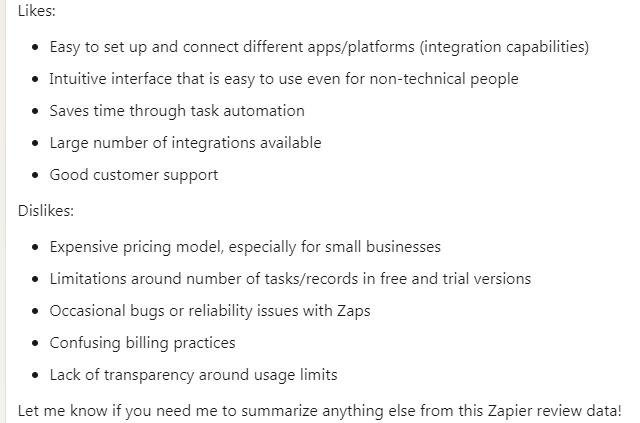

Prompt: Give me a high-level summary of the likes and dislikes of Zapier in bullet points.

OK. But am I sure it’s not hallucinating? To be sure, I asked it to give me the exact review excerpts as well:

Prompt: I'd like you to list down the likes and dislikes again, but this time substantiate each with an actual excerpt from the reviews.
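Asking for excerpts helps, but a quoted excerpt can itself be made up. One way to double-check is to confirm that each excerpt really appears in the scraped reviews. The sketch below is a rough illustration of that idea, not something from my actual workflow: the function names and the sample data are hypothetical, and it only catches excerpts that are not verbatim (after normalizing case, curly quotes, and whitespace), so a lightly paraphrased quote would also be flagged.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace."""
    for curly, straight in (("\u2018", "'"), ("\u2019", "'"),
                            ("\u201c", '"'), ("\u201d", '"')):
        text = text.replace(curly, straight)
    return re.sub(r"\s+", " ", text).strip().lower()


def unverified_excerpts(excerpts: list[str], reviews: list[str]) -> list[str]:
    """Return the excerpts that do not appear verbatim in any review."""
    haystack = [normalize(review) for review in reviews]
    return [
        excerpt
        for excerpt in excerpts
        if not any(normalize(excerpt) in review for review in haystack)
    ]


# Made-up sample data for illustration only.
reviews = [
    "Setting up my first Zap took minutes.  The pricing gets steep as tasks grow.",
    "Great integrations, but error messages are hard to debug.",
]
excerpts = [
    "The pricing gets steep as tasks grow",
    "Customer support never replied",  # not in any review, so it gets flagged
]
print(unverified_excerpts(excerpts, reviews))
```

Anything this returns is worth reading against the original review before it goes into a deck for leadership.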

Next up, I wanted to start segmenting the ratings by company type.

Prompt: I'd like you to give me the average star rating for each company size bracket, along with specific tactical ideas of how we can improve the rating for each group.
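An average is easy to recompute by hand, which gives you a quick sanity check on the numbers the model reports. Here is a minimal sketch using only Python's standard library. The column names `company_size` and `stars`, the function name, and the sample rows are all assumptions for illustration; rename them to match your own CSV.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Assumption: the cleaned CSV has "company_size" and "stars" columns.
# These rows are made-up sample data; for real use, pass open("your_file.csv").
SAMPLE_CSV = """company_size,stars
Small-Business,4.5
Small-Business,5
Mid-Market,4
Mid-Market,3
Enterprise,4.5
"""


def average_by_segment(csv_file, segment_col="company_size", rating_col="stars"):
    """Return {segment: mean rating}, skipping rows with a missing rating."""
    ratings = defaultdict(list)
    for row in csv.DictReader(csv_file):
        if row[rating_col]:
            ratings[row[segment_col]].append(float(row[rating_col]))
    return {segment: round(mean(values), 2) for segment, values in ratings.items()}


print(average_by_segment(io.StringIO(SAMPLE_CSV)))
```

If these figures disagree with what the model gave you, trust the recomputed ones and ask the model to explain how it arrived at its numbers.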

Then, I wanted to analyze the feedback by the features or problem themes the platform deals with, and see if there was a trend in the end-user personas giving that feedback.

Prompt: Summarize the positive and negative comments by feature themes (e.g. integrations etc.)? Also, for each feature theme, identify if there was a trend in terms of the reviewer's job title or company sizes that were issuing similar feedback.

From there on, I fiddled with the prompts below to get more insights into the data set:

What do mid-market companies think about Zapier’s solutions?

How do customers compare Zapier’s features to those of competitors or alternative solutions?

What are the common praises or criticisms regarding Zapier’s customer support service?

Are there any mentions of Zapier’s pricing? Do customers find it cost-effective or expensive?

What do customers have to say about the automation capabilities of Zapier?

What do customers have to say about their initial experience with setting up or implementing Zapier?

Are there mentions of the API capabilities of Zapier? Do users find it flexible for their needs?

Do customers feel that Zapier is scalable and able to grow with their business needs?

Are there comments on the uptime and reliability of the Zapier APIs?

Are there references to specific negative incidents that we should investigate further with the support team?

The best part?

You can repeat the process with your competitors as well to quickly identify where they are falling short and where their strengths lie.

If there are sufficient reviews, product marketers can re-use the same process to analyze user segmentation and sentiments to ideate on differentiated messaging.

What value can you add to the team as a new Product Manager in your first 30 days?

Onboarding as a Product Manager to gain context and history is important.

However, the first 30 days as a PM shouldn't be about observing from the sidelines. A new PM should still find ways to start "delivering" meaningful contributions.

This "agency" signals to your manager and team that you're wasting no time in adding value. In my case, it also helped counter impostor syndrome.

Below are some ideas to hit the ground running. One doesn't need to do them all (some might already be in place):

1. Journal product learnings.

You’re already looking into the what, why, how, and who behind the product. You might as well document them and share them.

Some ideas for documents you can start working on:

- Table of ICPs + pain points.

- How we sell the product.

- Acronym list.

- List of metrics we care about.

- Domain-specific knowledge.

- Flowchart a complex process or underlying logic.

- Develop a diagram for system components.

Image credit: Visual Paradigm

2. Spin up a process wiki.

As you integrate yourself into the team, you’ll pick up on the ticketing workflow, the flavor of “agile” they use, staging-production SOPs, security policies, etc.

Create a running doc where you record all of this. Not only does this help you get familiar with the processes, it also serves as a handy onboarding doc for future hires.

3. Support the in-flight sprint.

Open up the ticket list and see what engineers and designers need help with.

If any requirements are vague, jump in to help create clarity.

Volunteer to QA the sprint and file bugs.

4. Plot out competitors

Focus on the top three and, when possible, try using their products.

Figure out and document answers to these:

- What’s their primary target audience?

- What pain points do they solve?

- High-level feature comparison

- Pricing differences

5. Summarize insights from internal/external signals.

As you collect insights about the product and users, sum them up and democratize them.

Ex:

- Use the first part of this post to analyze G2/Capterra reviews with AI.

- Repeat the same with competitor reviews.

- Use a similar process to analyze support tickets & categorize common issues.

Record a Loom video to explain your take.

6. Clean up the dashboard.

Work with the manager to identify what metrics indicate success.

- If there is already a Mixpanel or GA4, clean up the dashboard.

- If reports live in different places, fire up a Data Studio report to consolidate them into one view.

- If they aren’t readily available, identify who can help extract those metrics.

7. Compose an End-of-week memo.

Keep your manager apprised of your week’s activities:

- Status of existing sprint

- What you learned this week

- Roadblocks

- Goals for next week

Tip: Schedule it for a Monday morning so that it doesn’t get buried.

8. Customer notes

If possible, work your way into customer calls with sales or customer success as a silent spectator. Create a shared spreadsheet documenting what you learn about objections and pain points.

Till next time,

Aatir
